Non-container applications

Schedulers bring many benefits to container-based workloads. Without dwelling on the pain points of an application transformation, there are quite simply a lot of traditional workloads out there: application processes on Java, .NET, and other runtimes that aren’t deemed appropriate for containers or are simply too expensive to touch.

Enter HashiCorp Nomad. Nomad brings the benefits that containers and microservices are promised after a digital transformation to runtimes such as Java and .NET. Nomad’s task drivers let me choose, among other things, the runtime to schedule: Docker, Java, exec, and raw_exec, just to name a few. With this in mind, it is time to see what I can get for my existing Java app. Now to figure out what to run.

A non-trivial Java application

Minecraft, quite frankly, works well as a test application because it gives us a server/client architecture. That pattern is common in traditional application workloads, where clients connect either directly to the server or to a load balancer in front of it.

Minecraft server is free and comes bundled simply as server.jar. It runs on macOS, Linux, or Windows and hosts virtual worlds that the Minecraft Java client can connect to. For those following along at home, if you do not have the Minecraft Java client, it does cost 35 Australian Dollerydoos. This translates to around 1 USD.

Scheduling Java and breaking down a sample job file

The entire job file for this deployment can be found here. Let’s talk about some of the major parts of this particular Nomad job.

Resources

Time to check out the resources my task requires:

    task "minecraft" {
      resources {
        cpu    = 800  # MHz
        memory = 900  # MB
        disk   = 2000
        network {
          port "access" {
            static = 25565
          }
        }
      }
      # remaining task configuration omitted from this excerpt
    }

The resources stanza reserves resources for the task: memory, CPU, and disk. When a job is submitted to the Nomad server cluster, it is forwarded to the Evaluation Broker. The broker will then take the job and begin processing it based on the definitions in the job file. Below is a gross oversimplification of the process:

- Identify nodes with available resources for the job

- Rank the nodes based on existing jobs and bin packing

- Select the highest ranking node and create an allocation plan

- Submit the allocation plan to leader

Once the plan is submitted, the leader creates the allocation as long as it does not conflict with the requirements of any other plan. This ensures the task gets the resources it reserved.

Artifacts

The artifact stanza provides the ability to retrieve, unpack, and validate remote archives or files. It can fetch files over HTTP, HTTPS, Git, Mercurial (hg), and S3, and anything that is an archive is automatically unarchived.

    artifact {
      source      = "https://raw.githubusercontent.com/pandom/cloud-nomad/master/minecraft/common/eula.txt"
      mode        = "file"
      destination = "eula.txt"
    }

    artifact {
      source      = "https://launcher.mojang.com/v1/objects/bb2b6b1aefcd70dfd1892149ac3a215f6c636b07/server.jar"
      mode        = "file"
      destination = "server.jar"
    }

Here I also fetch eula.txt from a remote repo, which is one approach to getting a file onto the node. server.jar requires it in order to run, which ensures the server runs in agreement with the EULA defined by Mojang.
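
The validation side of the artifact stanza can be sketched as follows. This is a minimal example, assuming you have computed the digest of server.jar yourself (for example with sha256sum); the checksum value below is a placeholder, not the real digest. Nomad compares the download against the checksum and fails the task if they differ.

```hcl
artifact {
  source      = "https://launcher.mojang.com/v1/objects/bb2b6b1aefcd70dfd1892149ac3a215f6c636b07/server.jar"
  mode        = "file"
  destination = "server.jar"

  options {
    # Placeholder (assumption): replace with the digest you computed yourself.
    checksum = "sha256:<digest-of-server.jar>"
  }
}
```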

Java Driver

The Java driver is a task driver that allows Nomad to schedule JAR files. An application owner does not need to refactor how their application runs, change its form factor, or re-platform it. The driver allows jar_path and jvm_options to be passed to the scheduled Java application.
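
As a rough sketch of how jar_path and jvm_options fit into a task, the fragment below shows a Java driver configuration. It is not taken from the linked job file: the heap sizes and the nogui argument are assumptions for illustration, and jar_path assumes the artifact stanza placed server.jar in the task directory.

```hcl
task "minecraft" {
  driver = "java"

  config {
    # Assumption: the artifact stanza placed server.jar in the task directory.
    jar_path = "server.jar"

    # Assumption: heap sizes chosen to stay under the 900 MB memory reservation.
    jvm_options = ["-Xms512m", "-Xmx768m"]

    # Assumption: "nogui" is passed to the server's main method to skip the GUI.
    args = ["nogui"]
  }
}
```

Keeping the maximum heap below the task's memory reservation leaves headroom for the JVM's own overhead.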

Accessing logs and underlying filesystem

Now for some routine validation and poking around with Nomad.

To grab the allocation id run nomad job status -all-allocs minecraft and then use the allocation id for nomad alloc status <allocid>.

Filesystem

It is possible to get a feel for the filesystem that underpins the Java allocation we have been given. Retrieve the allocation id, in our case 6de4ea36, and let’s explore the FS.

    nomad alloc fs -H 6de4ea36 minecraft
    Mode         Size      Modified Time         Name
    drwxrwxrwx   4096      2020-04-03T02:05:29Z  alloc/
    -rw-r--r--   2         2020-04-03T02:05:35Z  banned-ips.json
    -rw-r--r--   2         2020-04-03T02:05:35Z  banned-players.json
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  bin/
    drwxr-xr-x   4096      2020-04-03T02:05:29Z  dev/
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  etc/
    -rw-r--r--   10        2020-04-03T02:05:26Z  eula.txt
    -rw-r--r--   373       2020-04-03T02:05:29Z  executor.out
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  lib/
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  lib64/
    drwxrwxrwx   4096      2020-04-03T02:05:25Z  local/
    drwxr-xr-x   4096      2020-04-04T00:38:47Z  logs/
    -rw-r--r--   2         2020-04-03T02:05:35Z  ops.json
    drwxr-xr-x   4096      2020-04-03T02:05:29Z  proc/
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  run/
    drwxr-xr-x   12288     2020-04-03T02:05:25Z  sbin/
    drwxrwxrwx   60        2020-04-03T02:05:25Z  secrets/
    -rw-r--r--   36175593  2020-04-03T02:05:29Z  server.jar
    -rw-r--r--   940       2020-04-03T02:05:31Z  server.properties
    drwxr-xr-x   4096      2020-04-03T02:05:29Z  sys/
    dtrwxrwxrwx  4096      2020-04-03T02:05:35Z  tmp/
    -rw-r--r--   111       2020-04-04T00:49:46Z  usercache.json
    drwxr-xr-x   4096      2020-04-03T02:05:25Z  usr/
    -rw-r--r--   2         2020-04-03T02:05:35Z  whitelist.json
    drwxr-xr-x   4096      2020-04-06T12:23:55Z  world/

Super. I can see two things here. server.jar has obviously been pulled down and eula.txt exists. This means our artifacts have been downloaded.

Logs

Without configuring log forwarding or anything else, it is possible to access logs on a per-allocation basis with nomad alloc logs <allocid> minecraft.

    [00:38:47] [User Authenticator #1/INFO]: UUID of player anthonyburke is 208cae78-a7fb-43a6-83a5-1b5a682cf043
    [00:38:47] [Server thread/INFO]: anthonyburke[/10.7.0.1:63138] logged in with entity id 443 at (-56.5, 63.0, 159.5)
    [00:38:47] [Server thread/INFO]: anthonyburke joined the game
    [00:40:00] [Server thread/INFO]: anthonyburke lost connection: Disconnected
    [00:40:00] [Server thread/INFO]: anthonyburke left the game
    [00:49:46] [User Authenticator #2/INFO]: UUID of player anthonyburke is 208cae78-a7fb-43a6-83a5-1b5a682cf043
    [00:49:46] [Server thread/INFO]: anthonyburke[/10.7.0.1:63342] logged in with entity id 687 at (-59.69999998807907, 63.0, 158.69999998807907)
    [00:49:46] [Server thread/INFO]: anthonyburke joined the game
    [00:51:24] [Server thread/INFO]: anthonyburke lost connection: Disconnected
    [00:51:24] [Server thread/INFO]: anthonyburke left the game
    [01:14:31] [Server thread/WARN]: Can't keep up! Is the server overloaded? Running 2172ms or 43 ticks behind

Ooft! I already knew it was getting slammed but the logs confirmed for me my CPU and Memory reservations were a touch too low.
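
One way to act on that warning is to raise the reservations in the resources stanza. The fragment below is only a sketch: the new cpu and memory numbers are illustrative assumptions, not measured requirements for this server.

```hcl
resources {
  # Illustrative values only (assumption), not measured requirements.
  cpu    = 1600 # MHz, up from 800
  memory = 2048 # MB, up from 900

  network {
    port "access" {
      static = 25565
    }
  }
}
```

If JVM heap flags are set through jvm_options, they would need to be raised along with the memory reservation.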

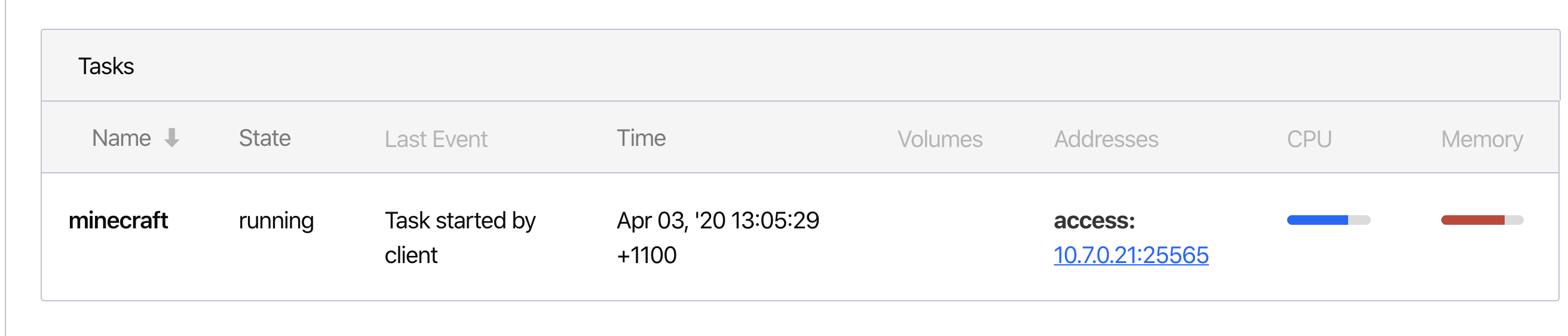

Validating access

The proof is in the client connecting, right? Let’s check out the port assigned to it.

The server has been scheduled at 10.7.0.21 and uses the static port we defined in our job file earlier. This can also be validated with nomad alloc status <allocid>. The output will list the address too if requested in the job file.

    ❯ nomad alloc status 6de4ea36
    ID                  = 6de4ea36-dff9-ab36-ac9c-494fdcfe344f
    Eval ID             = 2ccde759
    Name                = minecraft.mc-server[0]
    Node ID             = 1adec88d
    Node Name           = docker1
    Job ID              = minecraft
    Job Version         = 0
    Client Status       = running
    Client Description  = Tasks are running
    Desired Status      = run
    Desired Description = <none>
    Created             = 22h21m ago
    Modified            = 22h21m ago

    Task "minecraft" is "running"
    Task Resources
    CPU          Memory           Disk     Addresses
    155/800 MHz  693 MiB/900 MiB  300 MiB  access: 10.7.0.21:25565

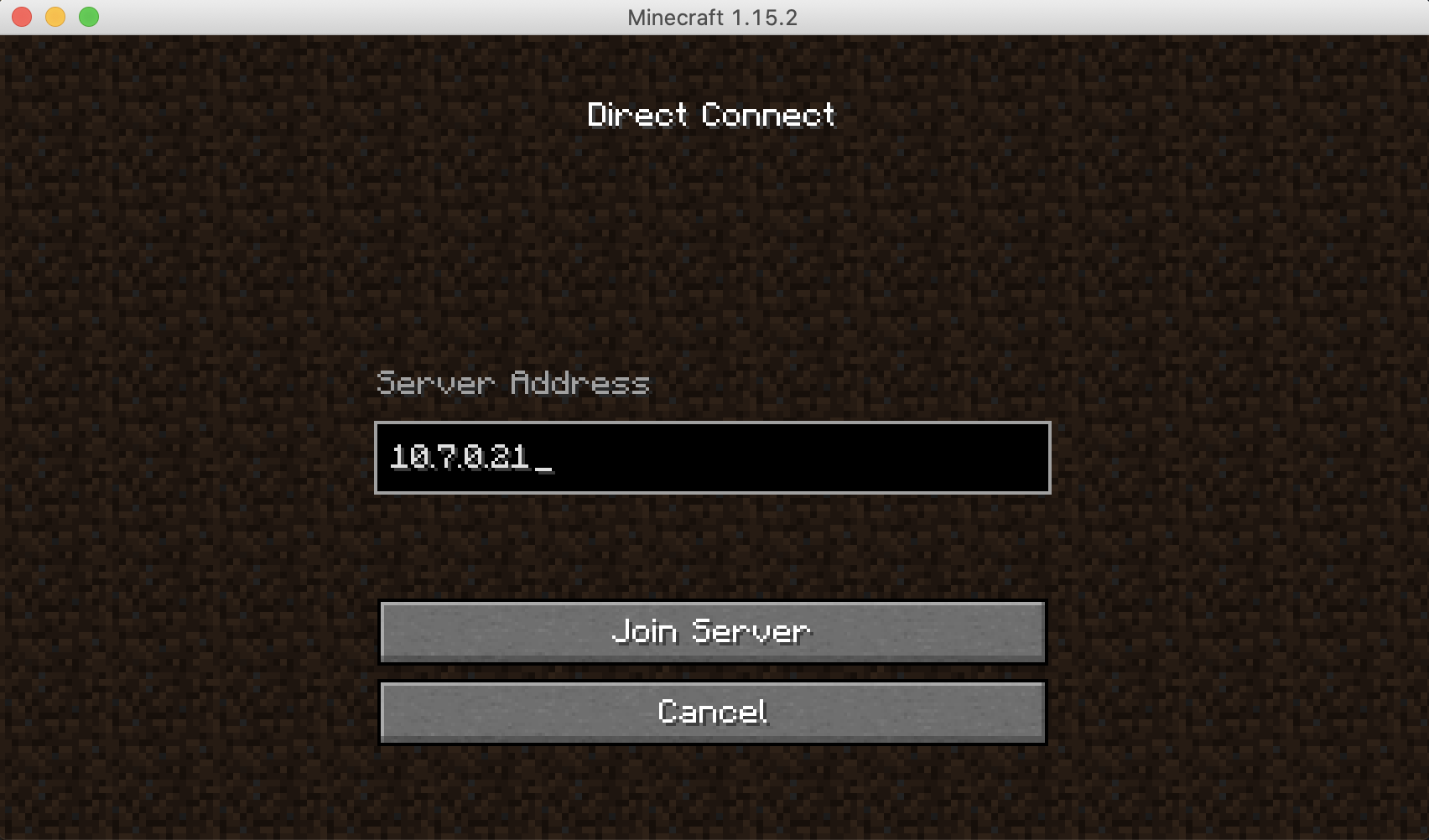

In the Minecraft Java client, select Multiplayer, then Direct Connect, and enter the IP address 10.7.0.21.

Join the Game and enjoy.

Improvements to make

This is an example of a working configuration but there are potential constraints or risks with my deployment and job file. They are topics I will cover in future parts:

- Artifacts - is there a way to ensure I can’t run server.jar without eula.txt existing?

- Dealing with logs and log management for the server

- Persistent storage - any reschedule of the allocation to a different node will leave behind any persistent data. Need to look at a CSI integration in Nomad

- Network connectivity - my client connects directly to the node and therefore any reschedule by Nomad will break the client.

- Version testing - Canary testing my Java application. For example - have clients connect to the “new” version of the application.

Conclusion

The net result is that we have used Nomad to schedule a Java application. The scaffolding we wrote around the application artifact, Minecraft, gave us the benefits of application consolidation via bin packing. It also sets us up for further improvements in upcoming posts.

Stay tuned.

Burkey